For those of you who just recently got an HD TV, be aware that you’re already behind the times, at least as far as many manufacturers are concerned.

First, let me give a 50,000-foot overview of the technology issues.

From roughly 1942 until the late 2000s, NTSC (as the US standard was called) dominated the TV landscape. That’s more than 60 years of one standard, and the biggest change during that time was the comparatively simple move from black-and-white to color (the resolution stayed the same).

The inertia built up over this time was huge. Adopting a totally new format would be no easy matter, and history has shown that to be the case. HD TV took quite some time to gain a foothold, but now it’s quickly becoming the standard for all TV broadcasts. It brought wider screens and higher resolutions, both of which make sports and movie watching far more enjoyable.

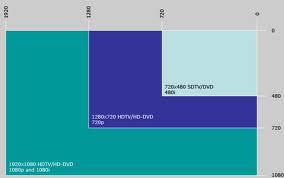

The old standard-definition resolution was known as 480i. The “i” stands for “interlaced,” which is a bit of optical trickery: an interlaced picture displays only half the image (240 rows) at a time and alternates between the two halves so quickly that your eye perceives a single image of 480 rows. Then came 480p. The “p” stands for “progressive,” which means you’re seeing all 480 rows of the image at once. Progressive-scan DVD players showed that 480p was preferable, presenting a more “realistic” image than 480i.
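To make the “half the image at a time” idea concrete, here is a toy sketch of interlacing: a progressive frame is split into two fields (the even rows and the odd rows), and the fields are then woven back into a full frame. Representing a frame as a plain list of rows, and the names `split_fields` and `weave`, are hypothetical simplifications; a real TV or decoder works on pixel arrays, and in broadcast video the two fields are usually captured a fraction of a second apart rather than cut from one still frame.

```python
# Toy model of interlacing: a "frame" is a list of rows.
# Assumes an even number of rows, as in a 480-row picture.

def split_fields(frame):
    """Return (top_field, bottom_field): even-numbered rows and odd-numbered rows."""
    return frame[0::2], frame[1::2]

def weave(top_field, bottom_field):
    """Interleave two fields back into one full frame, row by row."""
    frame = []
    for top_row, bottom_row in zip(top_field, bottom_field):
        frame.append(top_row)
        frame.append(bottom_row)
    return frame

# A toy 480-row frame where each "row" is just its row number.
frame = list(range(480))
top, bottom = split_fields(frame)
print(len(top), len(bottom))       # each field carries half the rows
print(weave(top, bottom) == frame)  # weaving the fields restores the frame
```

An interlaced display shows `top`, then `bottom`, in quick alternation; a progressive display shows the whole `frame` every time.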

The first steps into HD included the 720p resolution (720 rows of image), and quickly behind that came 1080i. Hey, why the “i” again? We went back to interlaced images simply as a matter of bandwidth. A standard-definition 480p image uses 337,920 pixels (704 × 480) to create a single image. Jumping to just 720p (1,280 × 720) increases that almost 3 times, to 921,600 pixels. That’s a lot more data to send. With 1080i the number jumps to 1,036,800 pixels (1,920 × 540), and remember, that’s for just half the image. Today’s default high end of 1080p uses 2,073,600 pixels (1,920 × 1,080) for every single image. So the jump from 480i, where each half-image field carries just 168,960 pixels, to 1080p means sending more than 12 times as much data per image. That’s 12 times the space, and 12 times the data to send over the air or through a cable.
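The pixel arithmetic above can be checked with a few lines of Python. This sketch assumes 480-line standard definition is 704 pixels wide, which is what the 337,920 figure implies, and counts interlaced formats by a single field (half the rows), as the text does.

```python
# Pixels per transmitted image for each format in the text.
# Assumption: 480-line SD is 704 pixels wide (704 x 480 = 337,920).
# Interlaced formats are counted per field, i.e. half the rows.
formats = {
    "480i (one field)": 704 * 240,
    "480p": 704 * 480,
    "720p": 1280 * 720,
    "1080i (one field)": 1920 * 540,
    "1080p": 1920 * 1080,
}

base = formats["480i (one field)"]
for name, pixels in formats.items():
    print(f"{name:18} {pixels:>10,} pixels  {pixels / base:5.2f}x a 480i field")
```

The 1080p line comes out at roughly 12.3 times a single 480i field, which is where the “more than 12 times” figure comes from; measured against a full 480p frame instead, the factor is about 6.1.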


What’s the answer to that problem? Compression. Compression is another bit of optical trickery that we’ve all experienced. It looks for similarities between pixels within an image (and, in video, between one frame and the next), stores those similar areas more compactly, and throws away fine detail the eye is unlikely to miss. The end result is an image that looks pretty much the same to our eye but at a much lower cost in bandwidth and space.
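Here is a deliberately tiny illustration of that trade-off, not how Blu-ray or broadcast codecs actually work (they use mathematical transforms and motion prediction between frames). The sketch first rounds each grayscale pixel to a coarse step so near-identical neighbors become exactly identical (the lossy part), then run-length encodes the row (the lossless part). The pixel values and the step size of 16 are made up for illustration.

```python
# Toy lossy compression of one row of grayscale pixels (0-255).
# Step 1 (lossy): round each value to a coarse step so that
#                 near-identical neighbors become exactly identical.
# Step 2 (lossless): run-length encode as [value, run_length] pairs.

def quantize(row, step):
    """Round each pixel to the nearest multiple of `step`, capped at 255.
    (Python's round() sends exact ties to the even multiple.)"""
    return [min(255, round(p / step) * step) for p in row]

def run_length_encode(row):
    """Collapse runs of equal values into [value, run_length] pairs."""
    runs = []
    for p in row:
        if runs and runs[-1][0] == p:
            runs[-1][1] += 1
        else:
            runs.append([p, 1])
    return runs

def decode(runs):
    """Expand [value, run_length] pairs back into a row of pixels."""
    return [value for value, count in runs for _ in range(count)]

# A slightly noisy patch of bright "sky" followed by a dark edge.
row = [192, 194, 190, 193, 191, 189, 195, 192, 48, 49, 47, 46]

exact = run_length_encode(row)
lossy = run_length_encode(quantize(row, step=16))
print(len(exact), "runs without quantizing")
print(len(lossy), "runs after quantizing")
print(decode(lossy))
```

Without the lossy step, the noisy row gains nothing (12 runs for 12 pixels). After quantizing it shrinks to 2 runs, but the original values can no longer be recovered. Busy, fast-changing detail such as churning water has few such similarities to exploit, so aggressive compression has to throw more of it away.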

Blu-ray players provide a very nice image, and most consumers are unaware that the image they’re seeing is actually heavily compressed to save space. Broadcasters use even more compression, since their constraint is bandwidth rather than storage space. Just watch any HD scene with a lot of moving water (like a movie set on a rough river) and you’ll see what I mean. The water will look blocky and lacking in detail. In fact, such scenes generally look far more realistic in standard definition (without compression) than they do in HD. The saving grace is that such scenes are usually short, so few people notice.

Services like Netflix depend on extreme compression to make their business models work. Imagine having to “broadcast” literally hundreds of thousands, or even millions, of HD shows all at the same time. The overhead is enormous. Netflix already accounts for more than 30% of total bandwidth usage every night during prime-time viewing hours in the US. In other words, about one out of every three bits of data sent to consumers each night comes from Netflix, and that’s with heavily compressed data. Other estimates put it as high as 65% of nightly Internet traffic.
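A back-of-the-envelope calculation shows why streaming is impossible without heavy compression. The numbers below are assumptions, not figures from this article: 24 bits of color per pixel, 30 frames per second, and a hypothetical 5 Mbit/s compressed HD stream. Real video usually also stores color at reduced resolution, so treat the raw figure as a rough upper-end estimate.

```python
# Rough estimate of uncompressed 1080p bandwidth versus a compressed stream.
# All three constants are illustrative assumptions.
BITS_PER_PIXEL = 24                 # 8 bits each for red, green, blue
FRAMES_PER_SECOND = 30
STREAM_BITS_PER_SECOND = 5_000_000  # hypothetical 5 Mbit/s HD stream

pixels_1080p = 1920 * 1080
raw_bits_per_second = pixels_1080p * BITS_PER_PIXEL * FRAMES_PER_SECOND

print(f"Uncompressed 1080p: {raw_bits_per_second / 1e9:.2f} Gbit/s")
print(f"Compression needed: about {raw_bits_per_second / STREAM_BITS_PER_SECOND:.0f}:1")
```

Under these assumptions, raw 1080p needs about 1.5 Gbit/s, so a 5 Mbit/s stream has to squeeze the picture by roughly 300 to 1, multiplied across every viewer watching at once.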

For those of us who are sensitive to such trade-offs, the reality is quite trying. I love Netflix, but I find the image quality rather awful for most shows. Whenever the camera pans (moves side to side), all I see is a choppy mess. When it’s not panning, I see lots of digital artifacts caused by heavy compression (the image looks splotchy).

Netflix and broadcasters are struggling to keep up with demand. Many channels still broadcast only in standard definition, yet consumers keep pushing for more, for better or worse. For me, I’d just like things to settle in for quite a while and let the bandwidth and space issues ease so that compression can be reduced or eliminated. I suspect many people will find the perceptual benefits dramatic once they can see the difference before and after.

The problem for manufacturers is that they liked what happened when consumers finally decided to go all-out for HD. TV sales skyrocketed and prices fell, each feeding the other in a whirlpool effect, until recently, when new TV sales suddenly bottomed out. Almost everyone who wanted an HD TV now has one (or more). The only thing manufacturers can do is try to impress you with more features, like 3D TV. Not surprisingly, that’s nowhere near as compelling as the move from standard definition to HD. It’s just too complicated.

So manufacturers decided that the real solution is to recreate the enthusiasm of the last resolution jump by offering so-called 4K TVs. They’re called this because they’re more or less 4,096 pixels wide. That’s roughly twice as wide as a 1080p image, which is actually 1,920 pixels wide (the 1080 refers to height, so it’s 1,080 pixels high), and 4K images are typically 2,160 pixels high, twice the height of 1080p. Thus a typical 4K image, at 4,096 × 2,160, uses 8,847,360 pixels for a single image, more than 4 times the bandwidth and space of a 1080p image. Note I said “typical,” as there isn’t a unified standard for 4K yet. As consumers, we all just love it when the standards aren’t set and we have to lay our money down. Remember Blu-ray vs. HD DVD or VHS vs. Betamax? Here we go again.
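Because “4K” roughly doubles both the width and the height of 1080p, the pixel count roughly quadruples. The sketch below compares two frame sizes that are commonly sold or shown under the 4K name; which one a particular TV uses is an assumption you would need to check against its specifications.

```python
# Pixel counts for two common "4K" frame sizes compared with 1080p.
# Doubling both width and height multiplies the pixel count by about 4.
resolutions = {
    "1080p": (1920, 1080),
    "4K UHD (3840 x 2160)": (3840, 2160),
    "4K DCI (4096 x 2160)": (4096, 2160),
}

base = 1920 * 1080
for name, (width, height) in resolutions.items():
    pixels = width * height
    print(f"{name:22} {pixels:>10,} pixels  {pixels / base:.2f}x 1080p")
```

The 3,840-wide version is exactly 4 times 1080p, while the 4,096-wide version is about 4.27 times, which is why the “4K” label alone doesn’t pin down the bandwidth.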

The issues with 4K TVs are not trivial. First of all, there’s the issue of content. A 4K TV wouldn’t make much sense without native 4K content to watch, just as a 3D TV isn’t much use without 3D content. Right now, years after the adoption of HD, we still have standard definition channels that seem years away from making the switch. We still have disc-based players that rely on compression, and broadcasters and streaming services that use extreme compression just to keep up. We are nowhere near ready for a “standard” that suddenly quadruples everything. It’s a recipe for disaster.

What’s more, the actual visual benefits are highly suspect. Perceiving the difference between standard definition and HD is one thing. Seeing the difference between 1080p and 4K in your family room, at normal seating distances, is another thing entirely. You’d need a TV roughly twice the current size to appreciate the difference. Think about it. That’s pretty much what we all did the first time around: we had 27″ TVs and jumped to 56″ sets or something similar. Are we suddenly going to try to fit 120″ TVs in our family rooms and sit 10 feet away from them? Okay, I might, but I’m crazy that way.
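One way to sanity-check the “twice the size” estimate is the common rule of thumb that a viewer with 20/20 vision resolves detail down to about 1 arcminute. The sketch below, which assumes a 16:9 screen and that 1-arcminute figure (an approximation, not a hard perceptual limit), estimates the farthest seat from which individual pixel rows can still be made out. The function name `max_useful_distance_ft` is hypothetical.

```python
import math

# Assumption: ~20/20 vision resolves about 1 arcminute (1/60 of a degree).
ARCMINUTE = math.radians(1 / 60)

def max_useful_distance_ft(diagonal_in, rows):
    """Distance (feet) beyond which one pixel row subtends less than 1 arcminute."""
    height_in = diagonal_in * 9 / math.hypot(16, 9)  # height of a 16:9 screen
    pixel_in = height_in / rows                      # height of one pixel row
    return pixel_in / math.tan(ARCMINUTE) / 12       # inches -> feet

for diagonal in (56, 120):
    for name, rows in (("1080p", 1080), ("4K", 2160)):
        feet = max_useful_distance_ft(diagonal, rows)
        print(f'{diagonal}" {name}: pixel rows resolvable within ~{feet:.1f} ft')
```

Because the distance scales with screen size divided by row count, doubling the rows halves the distance at which the extra detail is visible. To get it back at the same seat, the 4K screen needs twice the diagonal, which lines up with the estimate above. Under these assumptions, a 56″ 1080p set is already at the limit at about 7.3 feet, and a 56″ 4K set would need you within about 3.6 feet.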

The only place 4K makes any sense to me is in movie theaters that currently use digital projectors. Those projectors are great, but they’re only marginally higher resolution than 1080p, and on those huge screens you can sometimes make out individual pixels. Moving theaters to 4K would likely eliminate that once and for all, but that’s a very different situation from your family room.

And if you think this push to 4K is a bit much and a bit too soon, you’ll love knowing that 4K is nothing compared to what’s coming after it. The BBC set up something called Super Hi-Vision at the Olympics, where they showed a live 8K feed (roughly 33 million pixels per image) of the action in the Aquatics Center. That’s about another 4-fold increase over 4K. I can just see that 240″ (20-foot) TV in my basement already!